Alibaba Z-Image 2026 Update: A Milestone in Efficient, Open-Source AI Image Generation

In late January 2026, Alibaba's Tongyi Lab released a major update to its image generation foundation model, Z-Image, continuing its push toward high-quality, resource-efficient generative AI. The upgrade is part of a broader wave of AI image models that balance performance, speed, and accessibility. In this article, we'll explore what's new in the Z-Image 2026 release, how it compares with earlier versions and competing models, and what this means for developers, creatives, and enterprises worldwide.

Ready to try Z-Image right now? Experience Z-Image for free and see how it compares to other leading AI image generation tools. Want to explore even more image generation models? Try Pollo AI's diverse image generators.

What Is Z-Image? A Quick Recap

Originally released by Alibaba's Tongyi Lab in late 2025 as an open-source image generation model, Z-Image was built with efficiency and accessibility in mind. Unlike models with tens of billions of parameters, Z-Image uses a 6-billion-parameter architecture, making it much more suitable for consumer-level hardware and wider adoption.

The model uses a novel architecture known as the Scalable Single-Stream Diffusion Transformer (S3-DiT), which concatenates text tokens, image tokens, and semantic representations into a unified sequence. This design improves both computational efficiency and quality, particularly in text rendering — a known weak spot in many older models.

Z-Image: Foundation Model of the Image Family

According to the official release, Z-Image is positioned as the foundation model of the entire Image family, engineered for:

- Good quality

- Robust generative diversity

- Broad stylistic coverage

- Precise prompt adherence

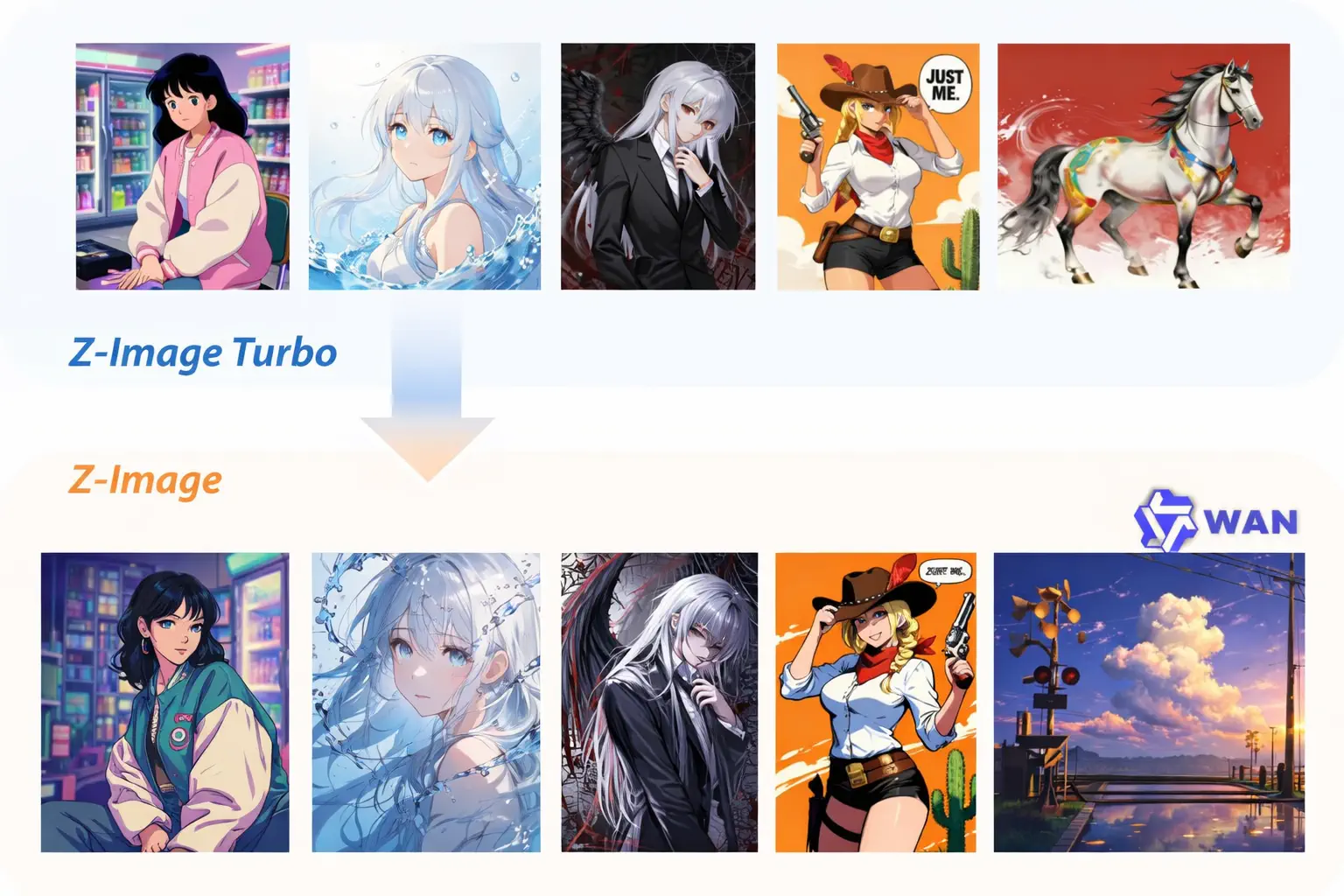

While Z-Image-Turbo is built for speed, Z-Image Base is a full-capacity, undistilled transformer designed for creators, researchers, and developers who require the highest levels of creative freedom and controllability.

What's New in the 2026 Z-Image Update

The January 27, 2026 Z-Image update focused on expanding the model's availability and capabilities, reinforcing Alibaba's commitment to open-source AI.

1. Open-Sourced Foundation Model

The biggest highlight is the formal public release of the Z-Image foundation model (6B parameters) on platforms like GitHub, Hugging Face, and ModelScope. This means developers and researchers can now access the full model weights, configuration files, and fine-tuning tools without restrictive licensing.

This change enables:

- Commercial and research use under permissive licensing

- Fine-tuning with LoRA and ControlNet

- Community-driven innovation with adapters and utilities
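To make the LoRA point concrete, here is a minimal NumPy sketch of the general low-rank adapter idea: the pretrained weight stays frozen and only two small matrices are trained. The layer sizes, rank, and `alpha` value are illustrative assumptions, not Z-Image's actual configuration or training code.

```python
import numpy as np

def lora_forward(x, w_frozen, a, b, alpha):
    """Frozen linear layer plus a scaled low-rank update (B @ A)."""
    rank = a.shape[0]
    base = x @ w_frozen.T                       # original, untouched path
    update = (alpha / rank) * (x @ a.T @ b.T)   # trainable low-rank path
    return base + update

# Hypothetical sizes chosen only for illustration.
d_in, d_out, rank = 64, 64, 4
rng = np.random.default_rng(0)
w = rng.standard_normal((d_out, d_in))        # frozen pretrained weight
a = rng.standard_normal((rank, d_in)) * 0.01  # trainable, small random init
b = np.zeros((d_out, rank))                   # trainable, zero init (no-op at start)
x = rng.standard_normal((2, d_in))

full_params = d_out * d_in
lora_params = rank * (d_in + d_out)
print(f"full layer: {full_params} params, LoRA adapter: {lora_params} params")
```

Because `b` starts at zero, the adapted layer initially behaves exactly like the frozen one, and training only has to update the much smaller `a` and `b` matrices.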

2. Model Family Expansion (Turbo, Base & Edit)

Although the original release focused on Z-Image-Turbo, the update clarifies the roadmap:

| Variant | Description |
|---|---|
| Z-Image Turbo | Ultra-fast generation with strong quality |
| Z-Image Base | Undistilled full model preserving complete training signal for CFG and professional use |
| Z-Image Edit | Planned version optimized for editing tasks such as inpainting and semantic adjustments |

Base's undistilled nature supports full Classifier-Free Guidance (CFG) and makes it a strong foundation for professional workflows.

3. Enhanced Accessibility and Deployment Options

The 2026 release also emphasizes ease of use with:

- Local deployment via Diffusers + Python

- ComfyUI integration for visual workflows

- Quantized GGUF builds for operation on lower-end GPUs
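For the Diffusers route, a local script might look roughly like the sketch below. It relies on the generic `DiffusionPipeline.from_pretrained` loader and assumes a Diffusers release that includes Z-Image support. The repository ID, step counts, and guidance values are assumptions for illustration only, so check the official model card before relying on them.

```python
def generation_settings(variant):
    """Illustrative defaults only (assumed values, not official ones)."""
    settings = {
        # Distilled few-step variant: few steps, guidance switched off.
        "turbo": {"num_inference_steps": 8, "guidance_scale": 0.0},
        # Undistilled variant: more steps, classifier-free guidance on.
        "base": {"num_inference_steps": 30, "guidance_scale": 4.0},
    }
    return settings[variant]

def generate(prompt, repo_id="Tongyi-MAI/Z-Image-Turbo", variant="turbo"):
    """Load the pipeline and render one image (repo_id is an assumption)."""
    # Heavy imports stay inside the function so the helper above
    # can be used without a GPU or the model weights.
    import torch
    from diffusers import DiffusionPipeline

    pipe = DiffusionPipeline.from_pretrained(repo_id, torch_dtype=torch.bfloat16)
    pipe.enable_model_cpu_offload()  # trades speed for lower peak VRAM
    result = pipe(prompt=prompt, **generation_settings(variant))
    return result.images[0]

print(generation_settings("turbo"))
```

Calling `generate("a neon sign that reads 'open'").save("out.png")` would download the weights on first use, so expect a large initial download.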

Notably, Z-Image Turbo has been integrated into Ollama, a tool for running models locally, where on Mac it supports photorealistic image generation and English/Chinese text rendering.

Z-Image vs Competitors: Strengths and Weaknesses

To understand the impact of Z-Image's 2026 update, here's a structured comparison with leading models:

Comparison Table: Z-Image vs SDXL vs FLUX vs Midjourney

| Feature | Z-Image (Base & Turbo) | Stable Diffusion XL (SDXL) | FLUX.2 | Midjourney v7 |
|---|---|---|---|---|
| Parameters | ~6B efficient architecture | ~3.5B (base model) | ~24B-32B+ | Proprietary |
| Open Source | ✅ Apache 2.0 | ✅ | Partially / mixed | ❌ Proprietary |
| Prompt Adherence | Excellent | Good | Very Good | Excellent artistic |
| Text Rendering | Strong bilingual | Weak-moderate | Moderate | Moderate |
| Speed (Inference) | Very Fast (Turbo) | Moderate | Slow-Moderate | Moderate |
| Local Deployment | ✅ Yes | ✅ Yes | ✅ Yes | ❌ Limited/No |
| Stylistic Diversity | Wide | Very Wide | Very Wide | Exceptional |
| Commercial API | Variable | ✅ Yes | ✅ Yes | ✅ Yes |
| Best For | Efficient, prompt-controlled generation | General use | Quality & style | Artistic & aesthetic focus |

Z-Image vs Stable Diffusion XL

Stable Diffusion XL remains widely used, but Z-Image offers:

- Better prompt adherence

- Faster inference with Turbo

- More modern architecture for text-image tasks

SDXL retains an edge in tooling maturity and ecosystem, but Z-Image's efficiency and Apache 2.0 licensing are accelerating its adoption.

Z-Image vs FLUX.2

FLUX.2, developed by Black Forest Labs, is a high-parameter model with strong artistic capabilities and broader stylistic range.

Where Z-Image excels:

- Speed and cost efficiency

- Open-source licensing

- Strong prompt control & bilingual text rendering

FLUX.2 may produce richer artistic styles in some creative workflows, but its resource demands are significantly higher.

Z-Image vs Midjourney

Midjourney, especially v7, remains a favorite for aesthetic and stylistic generation in artistic communities.

However:

- Midjourney is proprietary, limiting custom model integration

- Z-Image allows local deployment and custom training

For developers and enterprises needing controllable and open solutions, Z-Image presents a strong alternative.


Technical Deep Dive: Core Design of Z-Image

1. Scalable Single-Stream Diffusion Transformer (S3-DiT)

This architecture unifies text and visual processing, improving efficiency and bilingual text rendering. The S3-DiT concatenates:

- Text tokens from prompts

- Image tokens from the diffusion process

- Semantic representations for context understanding
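The single-stream idea can be illustrated with a toy example: the three token groups are joined into one sequence, and a single self-attention pass lets every token attend to every other. This is a simplified NumPy sketch with made-up shapes and random weights, not the actual S3-DiT implementation.

```python
import numpy as np

def single_stream_attention(text_tokens, semantic_tokens, image_tokens, seed=0):
    """Toy single-head self-attention over one concatenated sequence."""
    # One sequence: [text | semantic | image], all sharing the same width d.
    x = np.concatenate([text_tokens, semantic_tokens, image_tokens], axis=0)
    d = x.shape[1]
    rng = np.random.default_rng(seed)
    # Hypothetical projection weights; a real model learns these.
    w_q, w_k, w_v = (rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(3))
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(d)
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # softmax over the full sequence
    return weights @ v, weights

# Made-up token counts and embedding width, for illustration only.
rng = np.random.default_rng(42)
text = rng.standard_normal((5, 32))
semantic = rng.standard_normal((3, 32))
image = rng.standard_normal((8, 32))

out, attn = single_stream_attention(text, semantic, image)
print(out.shape, attn.shape)
```

Each row of `attn` spans all 16 positions, so an image token's attention covers text and semantic tokens in the same pass rather than through a separate cross-attention module.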

2. Knowledge Distillation for Turbo Variant

Turbo is distilled from the full model into a few-step sampler, which is what lets it reach sub-second inference on supported hardware while maintaining high visual fidelity.

3. Quantization Support

Quantized builds (GGUF) make Z-Image executable on lower VRAM GPUs, broadening accessibility without cloud-only dependency.

| Format | VRAM Required | Use Case |
|---|---|---|
| Full Precision | 16GB+ | Maximum quality |
| FP16 | 8-12GB | Balanced performance |
| GGUF Quantized | 4-8GB | Consumer hardware |
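As a rough sanity check on numbers like these, the memory needed just to hold the weights can be estimated as parameter count times bytes per parameter. The sketch below applies that arithmetic to a 6B-parameter model. It covers weights only, so the text encoder, VAE, activations, and any CPU offloading are deliberately left out, and real quantized formats store slightly more than their nominal bit width.

```python
def weight_memory_gib(num_params, bits_per_param):
    """Memory for the weights alone, in GiB (1 GiB = 1024**3 bytes)."""
    return num_params * bits_per_param / 8 / 1024**3

PARAMS = 6e9  # the ~6B figure quoted for Z-Image

for label, bits in [("FP32", 32), ("FP16/BF16", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label:>10}: {weight_memory_gib(PARAMS, bits):.1f} GiB")
```

Actual VRAM use depends on resolution, batch size, and offloading strategy, so treat any such table as rough guidance rather than a hard requirement.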

4. Undistilled Architecture & Full CFG

Z-Image Base preserves the complete training signal and supports full Classifier-Free Guidance, enabling precise control for complex prompts and professional workflows.
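For readers new to CFG, the standard formulation runs the model twice per step, once with the prompt and once without, then extrapolates from the unconditional prediction toward the conditional one. The snippet below shows that textbook combination with toy vectors. Whether Z-Image Base uses exactly this form is an assumption on our part.

```python
import numpy as np

def cfg_combine(pred_uncond, pred_cond, guidance_scale):
    """Standard classifier-free guidance: push away from the unconditional prediction."""
    return pred_uncond + guidance_scale * (pred_cond - pred_uncond)

# Toy predictions standing in for the model's two outputs at one step.
uncond = np.array([0.0, 1.0, 2.0])
cond = np.array([1.0, 1.0, 0.0])

print(cfg_combine(uncond, cond, 1.0))  # scale 1.0 reproduces the conditional prediction
print(cfg_combine(uncond, cond, 4.0))  # larger scale exaggerates the prompt's influence
```

A scale of 1.0 simply returns the conditional prediction, while higher values trade diversity for stronger prompt adherence, which is the control knob an undistilled model keeps available.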

Community and Ecosystem Impact

Since the update, community resources for Z-Image have proliferated. On platforms like Civitai, users are sharing adapters, LoRAs, and workflows. This growth parallels the early Stable Diffusion ecosystem, but with a stronger focus on permissive licensing and prompt precision.

Real Use Cases

Developers and creators are using Z-Image for:

| Use Case | Benefits |
|---|---|
| Rapid prototyping of visual concepts | Fast iteration with Turbo variant |
| Custom brand assets with bilingual prompts | English/Chinese text rendering |
| Automated illustration pipelines | API integration support |
| Local, offline creative tools | Privacy and cost savings |

Frequently Asked Questions

Q: Is Z-Image free to use?

A: Yes, Z-Image is released under the Apache 2.0 license, which allows both commercial and personal use. You can also try it for free on our platform.

Q: What hardware do I need to run Z-Image locally?

A: With quantized GGUF builds, you can run Z-Image on GPUs with as little as 4-8GB VRAM. For full precision, 16GB+ is recommended.

Q: How does Z-Image compare to Midjourney for artistic work?

A: Midjourney excels in aesthetic styling, while Z-Image offers better prompt control and open-source flexibility. Z-Image is ideal for developers who need customizable, deployable solutions.

Q: Can I fine-tune Z-Image for my specific use case?

A: Yes, Z-Image supports fine-tuning with LoRA and ControlNet, making it highly adaptable for custom workflows.

Q: Where can I try different AI image generation models?

A: You can try Z-Image for free on our platform, or explore multiple image generation models on Pollo AI.

Getting Started

For Developers and Researchers:

Z-Image is a strong choice thanks to its open-source licensing, efficient architecture, and full CFG support. Download the weights from Hugging Face, GitHub, or ModelScope and integrate them into your pipeline.

For Creators and Artists:

Try Z-Image without any setup:

- 👉 Free Z-Image Generator - No installation required

- 👉 Explore More AI Image Models - Compare different styles and capabilities

For Enterprises:

Z-Image's permissive licensing and local deployment options make it ideal for businesses requiring data privacy and customization.

Conclusion

The Z-Image 2026 update from Alibaba Tongyi Lab represents a meaningful evolution in AI image generation: an open-source model that combines speed, accessibility, and quality in a package practical for both individuals and enterprises.

By open-sourcing the foundation model, expanding tooling support, and clarifying the path toward advanced variants, Alibaba has positioned Z-Image as a cornerstone in the next generation of AI art tools.

Whether you're a developer building custom solutions, a creative looking for rapid ideation tools, or a business seeking cost-effective AI capabilities, Z-Image's evolution warrants your attention.

Ready to experience the difference? Try Z-Image for free and join thousands of creators already using cutting-edge AI image generation. Want to explore even more options? Check out Pollo AI's comprehensive image generation suite.

Last updated: January 28, 2026
